I started this research with one question in mind: Which image generation model gives creators the best combination of speed, accuracy, quality, and reliability? Although there’s...

In the span of a few short years, artificial intelligence has evolved from a buzzword to a foundational force reshaping how software gets built, brainstormed, debugged,...

The hum of electric motors, the soft whirr of servos, and the mesmerizing sight of machines that can lift, carry, see, and even decide are no...

When Google’s Veo 3.1 arrived in early 2026, it marked more than just a series of incremental tweaks. What started as a promising generative video model has...

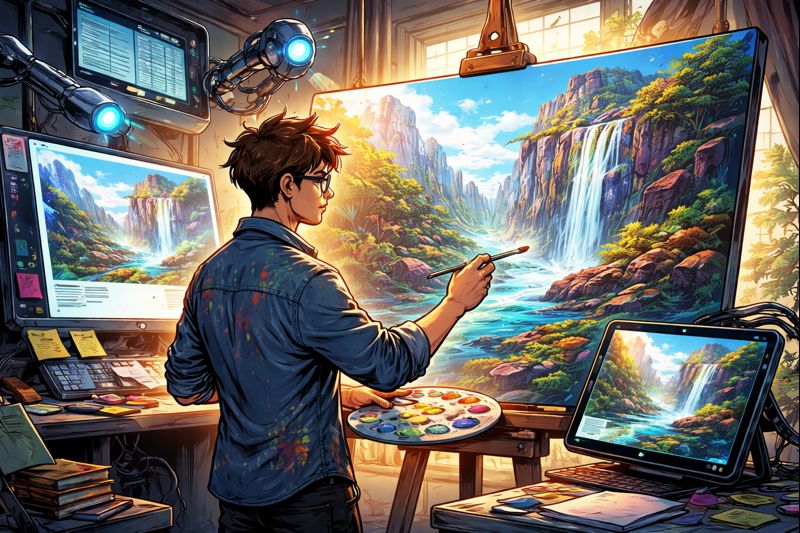

When the Algorithm Became a Brush

In a tiny studio perched above a noisy Manhattan street, digital artist Kelly Boesch leans back from her glowing screen...

In the world of artificial intelligence, innovation rarely unfolds in isolation. More often it is shaped by strategic alliances, painful pivots, and the occasional leap of...

As DeepSeek’s next-generation artificial intelligence model nears its rumored launch date, the tech world is holding its collective breath. DeepSeek V4, anticipated to be unveiled during...

A few years ago, I was juggling a demanding job, a side project, and the faint hope of someday becoming my own boss. Like many ambitious...

Spotify is taking a major step into the future of music by partnering with the biggest names in the recording industry to build artificial intelligence tools...

As 2026 unfolds, we’re entering a phase where cutting-edge technologies are no longer just headline fodder but strategic business imperatives and real-world utilities. Last year marked...