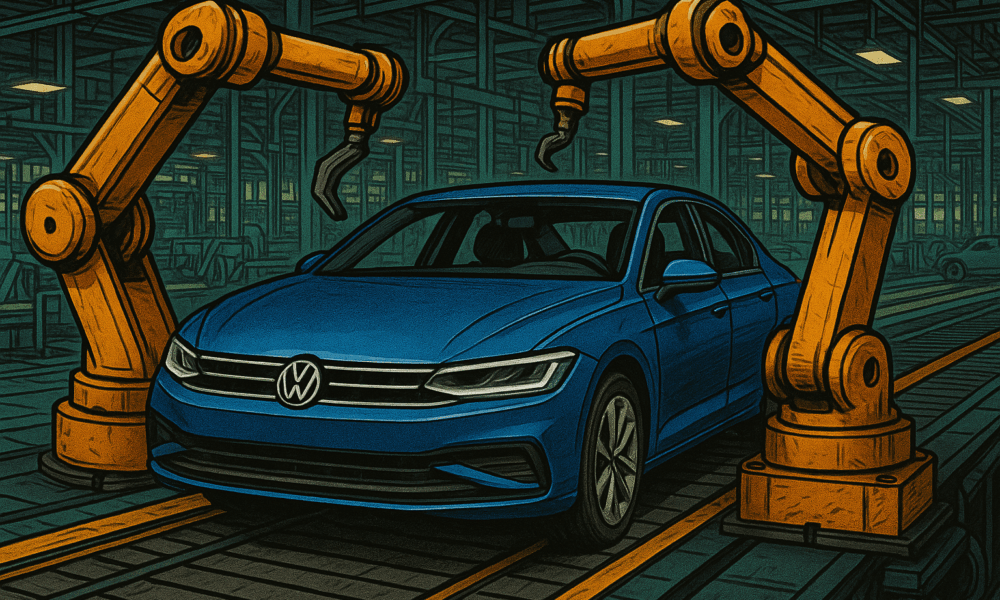

Introduction: Driving Into the Digital Future

Once known for perfecting mass production, Volkswagen is now leading a new kind of industrial revolution: one driven not by assembly...

For the first time in OpenAI’s history, its flagship models are directly available through another major cloud provider: Amazon Web Services. This historic move, announced on...